How to do mesh-valued regression?#

[1]:

import numpy as np

import pyvista as pv

from matplotlib import pyplot as plt

from sklearn.decomposition import PCA

from sklearn.linear_model import LinearRegression

from sklearn.metrics import r2_score

from sklearn.preprocessing import FunctionTransformer, StandardScaler

import polpo.preprocessing.dict as ppdict

import polpo.preprocessing.pd as ppd

from polpo.models import ObjectRegressor

from polpo.plot.pyvista import RegisteredMeshesGifPlotter

from polpo.preprocessing import Map

from polpo.preprocessing.learning import DictsToXY

from polpo.preprocessing.load.pregnancy.jacobs import MeshLoader, TabularDataLoader

from polpo.preprocessing.mesh.conversion import ToVertices

from polpo.preprocessing.mesh.registration import RigidAlignment

from polpo.sklearn.adapter import AdapterPipeline

from polpo.sklearn.mesh import BiMeshesToVertices

from polpo.sklearn.np import BiFlattenButFirst, FlattenButFirst

[KeOps] Warning : cuda was detected, but driver API could not be initialized. Switching to cpu only.

[2]:

STATIC_VIZ = True

if STATIC_VIZ:

pv.set_jupyter_backend("static")

Loading meshes#

[3]:

subject_id = "01"

file_finder = MeshLoader(

subject_subset=[subject_id],

struct_subset=["L_Hipp"],

as_mesh=True,

)

prep_pipe = RigidAlignment(max_iterations=500)

pipe = file_finder + ppdict.ExtractUniqueKey(nested=True) + prep_pipe

meshes = pipe()

Loading tabular data#

[4]:

subject_id = "01"

pipe = TabularDataLoader(subject_subset=[subject_id], index_by_session=True)

df = pipe()

INFO: Data has already been downloaded... using cached file ('/home/luisfpereira/.herbrain/data/maternal/maternal_brain_project_pilot/rawdata/28Baby_Hormones.csv').

Here, we filter the tabular data.

[5]:

session_selector = ppd.DfIsInFilter("stage", ["post"], negate=True)

predictor_selector = (

session_selector + ppd.ColumnsSelector("gestWeek") + ppd.SeriesToDict()

)

[6]:

x_dict = predictor_selector(df)

Merge data#

We get the data in the proper format for fitting

[7]:

dataset_pipe = DictsToXY()

X, meshes_ = dataset_pipe((x_dict, meshes))

X.shape, len(meshes_)

[7]:

((19, 1), 19)

Create and fit regressor#

Follow How to perform dimensionality reduction on a mesh?, we create a pipeline to transform the output variable.

[8]:

pca = PCA(n_components=4)

objs2y = AdapterPipeline(

steps=[

BiMeshesToVertices(index=0),

FunctionTransformer(func=np.stack),

BiFlattenButFirst(),

StandardScaler(with_std=False),

pca,

],

)

Tip: polpo.models.Meshes2FlatVertices is syntax sugar for the code above.

[9]:

model = ObjectRegressor(LinearRegression(fit_intercept=True), objs2y=objs2y)

[10]:

model.fit(X, meshes_)

[10]:

ObjectRegressor(objs2y=AdapterPipeline(steps=[('step_0', BiMeshesToVertices()),

('step_1',

FunctionTransformer(func=<function stack at 0x7f7f9d6bd930>)),

('step_2', BiFlattenButFirst()),

('step_3',

StandardScaler(with_std=False)),

('step_4', PCA(n_components=4))]))In a Jupyter environment, please rerun this cell to show the HTML representation or trust the notebook. On GitHub, the HTML representation is unable to render, please try loading this page with nbviewer.org.

Parameters

| objs2y | AdapterPipeli...mponents=4))]) |

Parameters

| steps | [('step_0', ...), ('step_1', ...)] |

Parameters

| step | <built-in function asarray> |

Parameters

| step | <function atl...x7f7f9d6bd1f0> |

Parameters

| regressor | LinearRegression() | |

| transformer | AdapterPipeli...mponents=4))]) | |

| func | None | |

| inverse_func | None | |

| check_inverse | False |

LinearRegression()

Parameters

| fit_intercept | True | |

| copy_X | True | |

| tol | 1e-06 | |

| n_jobs | None | |

| positive | False |

Parameters

| index | 0 |

Parameters

| func | <function sta...x7f7f9d6bd930> | |

| inverse_func | None | |

| validate | False | |

| accept_sparse | False | |

| check_inverse | True | |

| feature_names_out | None | |

| kw_args | None | |

| inv_kw_args | None |

Parameters

Parameters

| copy | True | |

| with_mean | True | |

| with_std | False |

Parameters

| n_components | 4 | |

| copy | True | |

| whiten | False | |

| svd_solver | 'auto' | |

| tol | 0.0 | |

| iterated_power | 'auto' | |

| n_oversamples | 10 | |

| power_iteration_normalizer | 'auto' | |

| random_state | None |

Evaluate fit#

model.predict outputs meshes, but we know LinearRegression sees PCA components. We can evaluate r2_score by applying transform.

NB: these are values on the training data.

Tip: objs2y is available in model.objs2y.

[11]:

meshes_pred = model.predict(X)

y_true = objs2y.transform(meshes_)

y_pred = objs2y.transform(meshes_pred)

r2_score(y_true, y_pred, multioutput="raw_values")

[11]:

array([0.04356305, 0.00644153, 0.24583461, 0.00145247])

[12]:

r2_score(y_true, y_pred, multioutput="uniform_average")

[12]:

0.07432291550369732

This shows the model performs poorly. (NB: the goal of this notebook is not to find a great model, but to show how the analysis can be performed. Adapting the pipeline to use different models is a no-brainer.)

The analysis can also be done at a mesh level. The following assumes Euclidean distance.

[13]:

meshes2flatvertices = Map(ToVertices()) + np.stack + FlattenButFirst()

r2_score(

meshes2flatvertices(meshes_),

meshes2flatvertices(meshes_pred),

multioutput="uniform_average",

)

[13]:

0.04844193363625151

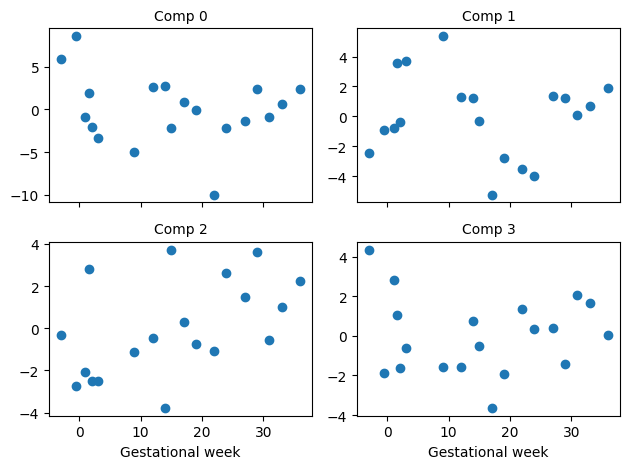

To build understanding, let’s plot the data the model actually “sees”.

[14]:

_, axes = plt.subplots(2, 2, sharex=True)

for index in range(4):

ax = axes[index // 2][index % 2]

ax.scatter(X[:, 0], y_true[:, index])

ax.set_title(f"Comp {index}", fontsize=10)

if index > 1:

ax.set_xlabel("Gestational week")

plt.tight_layout()

Visualize predictions#

[15]:

X_pred = np.linspace(-3, 42, num=10)[:, None]

meshes_pred = model.predict(X_pred)

[16]:

pl = RegisteredMeshesGifPlotter(fps=3)

pl.add_meshes({int(x): mesh for x, mesh in zip(X_pred[:, 0], meshes_pred)})

pl.close()

pl.show()

[16]:

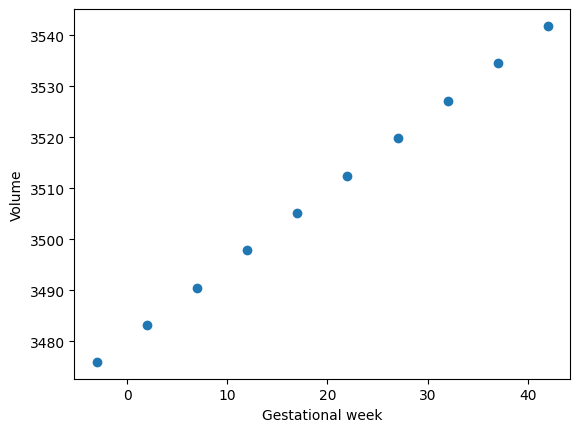

Let’s check the predicted volumes.

[17]:

volumes = [mesh.volume for mesh in meshes_pred]

plt.scatter(X_pred, volumes)

plt.xlabel("Gestational week")

plt.ylabel("Volume");