TAG-DS TDL Challenge 2025

Welcome to the Topological Deep Learning Challenge 2025: Expanding the Data Landscape, sponsored by Arlequin AI and hosted at the first Topology, Algebra, and Geometry in Data Science (TAG-DS) Conference.

Organizers: Guillermo Bernardez, Lev Telyatnikov, Mathilde Papillon, Marco Montagna, Miquel Ferriol, Raffael Theiler, Olga Fink, Nina Miolane

See also

Link to the challenge repository: geometric-intelligence/TopoBench.

🏆 Winners

Category A.1: Broadening Benchmarks

Winner: Amiiza team. Their implementation of ATLAS Top Tagging brings a novel benchmark from the particle physics community into the TDL ecosystem.

Honorable Mentions: MappingComplexityLab for the HypBench Dataset and Loris for the Graphland Benchmark.

Category A.2: Natively Higher-Order Datasets

Winner: Hugo Walter. He implemented the Cornell LabeledNodesDataset, consisting of 8 single-labeled hypergraph datasets, representing a significant step forward for hypergraph benchmarking.

Honorable Mentions: IgPA for the Conjugated Molecule dataset and TJPaik for Analog Circuits implementations.

Category B.1: Large-Scale Inductive Infrastructure

Winner: DLLB team. This team successfully tackled the challenge of migrating to OnDiskDataset support in the transductive setting.

Honorable Mention: DLLB team. The team received a special acknowledgement for their impressive strides in scalable data loading in the inductive setting as well.

Category B.2: Pioneering New Benchmark Tasks

Winner: DLLB team. They introduced Link Prediction to the benchmark suite, establishing a new standard for the field.

Honorable Mention: NeuroTriangles team. Recognized for proposing higher-order cell tasks on a neuroscience dataset.

Motivation

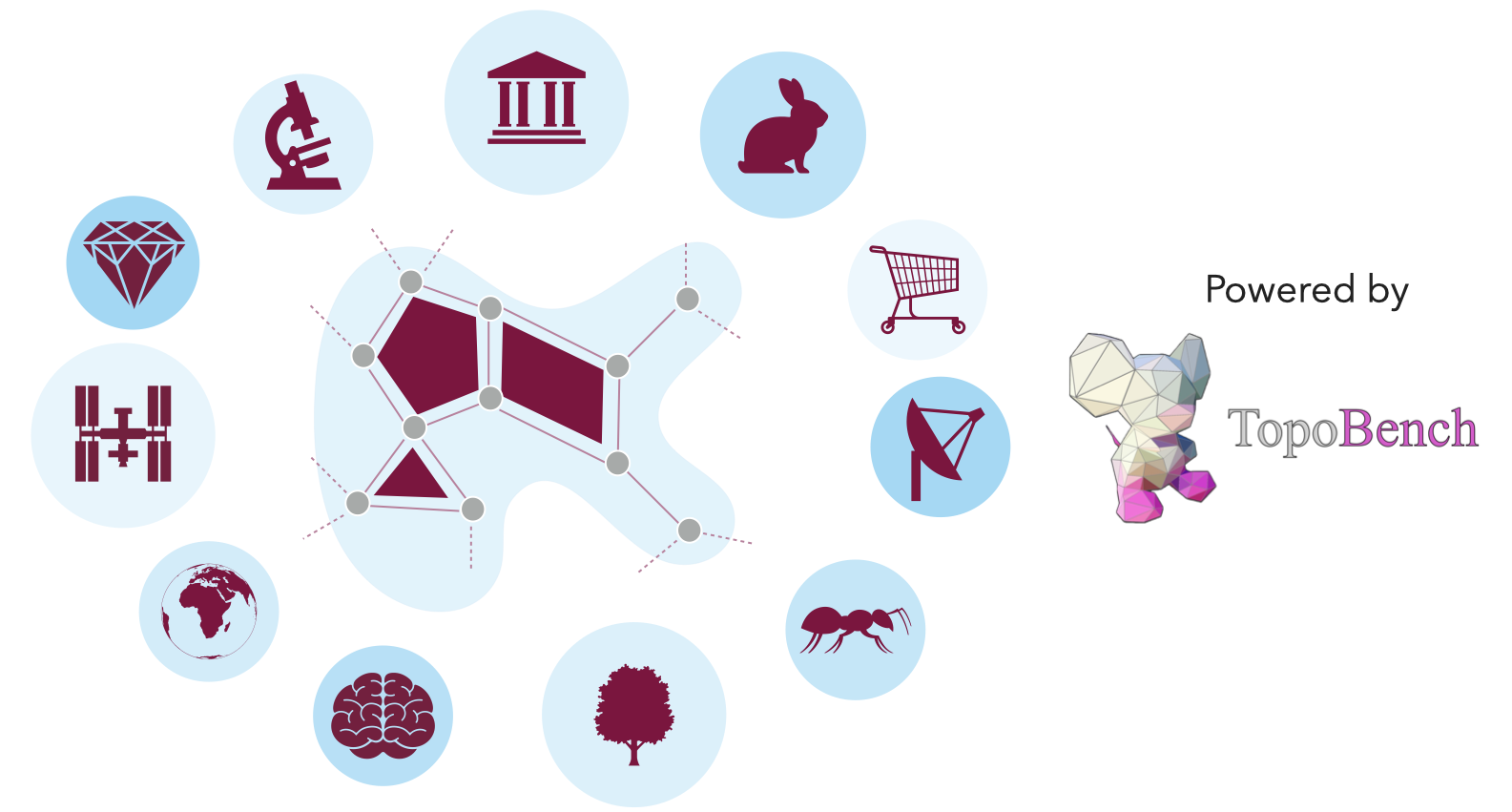

Topological Deep Learning (TDL) is emerging as a powerful paradigm for analyzing data with topological neural networks, a class of models that extends Graph Neural Networks (GNNs) to capture interactions beyond simple pairs of nodes. While GNNs model pairwise links—like friendships in a social network—many systems involve higher-order relationships, such as six atoms forming a ring in a molecule or multiple researchers co-authoring a paper in a co-authorship network. TDL provides a principled framework to represent and learn from these richer, multi-way relationships, making it especially suited for complex data in science and engineering.

TopoBench is the premier open-source platform for developing and benchmarking TDL models. It provides a unified interface for datasets, loaders, and tasks across topological domains. TopoBench enables reproducible comparisons, accelerates model development, and serves as the central hub for TDL experimentation.

For TDL to realize its full potential, the data landscape must evolve (Papamarkou et al., 2024). The field’s current reliance on a handful of well-worn benchmarks creates a bottleneck for innovation and rigorous evaluation. To propel the field forward, we focus on the following fronts:

Broadening the foundational benchmarks of TDL by adapting diverse datasets from the GNN community and beyond.

Deepening TDL capabilities by building infrastructure for large-scale datasets and novel benchmark tasks.

We are calling on the community to build a richer, more robust, and scalable data ecosystem for TDL. By participating, you will be paving the way for the next generation of topological models.

Description of the Challenge

The 2025 Topological Deep Learning Challenge is organized into two primary missions designed to systematically expand the TDL ecosystem: expanding the data landscape and advancing the core data infrastructure. Participants are invited to contribute one dataset (or dataset class, such as TUDataset) per submission, and each submission belongs to exactly one category. For examples of datasets to contribute, please refer to the Reference Datasets Table below. We also welcome other datasets, either existing or novel, as long as they are hosted on an open-source platform.

We invite participants to review this webpage regularly, as more details might be added to answer relevant questions.

Mission A — Expanding the Data Landscape

This mission focuses on enriching the TDL ecosystem with a diverse and meaningful collection of datasets.

Category A.1: Broadening Benchmarks with Graphs & Point Clouds

(Difficulty: Easy/Medium)

Implement a well-established graph or point cloud dataset to expand TDL’s applicability to new fields. Alternatively, help bridge the gap between TDL and mainstream GNN research by incorporating the challenging benchmarks used to test modern graph models. TopoBench includes a suite of topological transforms that can “lift” these graph-based or point cloud datasets to higher-order domains, such as hypergraphs or simplicial complexes.
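To give an intuition for what a “lift” does, the sketch below turns a plain edge list into a 2-dimensional simplicial complex by promoting every triangle (3-clique) to a 2-simplex. It is a minimal, stdlib-only illustration under our own assumptions; the function name lift_to_simplicial_complex is hypothetical and is not one of TopoBench’s transforms, which operate on PyG data objects and offer many more lifting strategies.

```python
def lift_to_simplicial_complex(num_nodes, edges):
    """Clique-style lift of a graph to a 2-dimensional simplicial complex.

    Hypothetical helper for illustration only; it is not a TopoBench transform.
    Returns a dict mapping each dimension to a sorted list of simplices (tuples).
    """
    # Treat edges as undirected: (u, v) and (v, u) become the same 1-simplex.
    edge_set = {tuple(sorted(e)) for e in edges if e[0] != e[1]}
    neighbors = {v: set() for v in range(num_nodes)}
    for u, v in edge_set:
        neighbors[u].add(v)
        neighbors[v].add(u)

    # Every common neighbor w of an edge (u, v) closes a triangle u-v-w,
    # which the lift promotes to a 2-simplex (a higher-order cell).
    triangles = set()
    for u, v in edge_set:
        for w in neighbors[u] & neighbors[v]:
            triangles.add(tuple(sorted((u, v, w))))

    return {
        0: [(v,) for v in range(num_nodes)],  # nodes
        1: sorted(edge_set),                  # edges
        2: sorted(triangles),                 # filled triangles
    }


# Usage: a triangle 0-1-2 with a pendant edge 2-3.
complex_ = lift_to_simplicial_complex(4, [(0, 1), (1, 2), (2, 0), (2, 3)])
print(complex_[2])  # [(0, 1, 2)]
```

The graph itself is unchanged; the lift only adds higher-order cells that a topological model can then pass messages over.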

Category A.2: Curating Natively Higher-Order Datasets

(Difficulty: Medium)

Integrate datasets where higher-order structures (e.g., simplicial complexes, hypergraphs) are a native feature of the data, rather than being algorithmically inferred by transforms in TopoBench. These datasets are critical for testing the unique expressive power of topological models.
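In such datasets the higher-order structure is read directly from the data. For example, in a co-authorship hypergraph each paper is a single hyperedge connecting all of its authors. The sketch below, a minimal stdlib-only illustration with a hypothetical helper name, stores that structure as a node-by-hyperedge incidence matrix, a common representation for hypergraphs; it is not necessarily the format TopoBench uses internally.

```python
def incidence_matrix(num_nodes, hyperedges):
    """Dense node-by-hyperedge incidence matrix B with B[v][e] = 1 if node v is in hyperedge e.

    Hypothetical helper for illustration; TopoBench's own data format may differ.
    """
    matrix = [[0] * len(hyperedges) for _ in range(num_nodes)]
    for e, members in enumerate(hyperedges):
        for v in set(members):
            if not 0 <= v < num_nodes:
                raise ValueError(f"node {v} in hyperedge {e} is out of range")
            matrix[v][e] = 1
    return matrix


# Usage: 4 authors, 2 papers. Paper 0 is co-authored by authors 0, 1 and 2,
# which is one 3-way relation rather than three independent pairwise links.
papers = [[0, 1, 2], [1, 3]]
B = incidence_matrix(4, papers)
print(B)  # [[1, 0], [1, 1], [1, 0], [0, 1]]
```

Column sums give hyperedge sizes and row sums give node degrees, so no information about the 3-way relation is lost, unlike a pairwise graph projection.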

Mission B — Advancing the Data Infrastructure

Category B.1: Developing Large-Scale Inductive Data Infrastructure

(Difficulty: Hard)

This mission focuses on building the scalable and versatile software tools needed for next-generation TDL research. It challenges participants to engineer a scalable data loading pipeline for large-scale inductive learning settings, accommodating datasets with either many small graphs or a few giant ones.

The primary obstacle is that standard InMemoryDataset loaders often fail when preprocessing large datasets, especially during memory-intensive “lifting” operations that construct higher-order topological domains. This process can easily exhaust system RAM, leading to crashes.

Core task. Implement a robust OnDiskDataset loader that processes and saves each sample to disk individually, effectively bypassing memory bottlenecks and enabling datasets that were previously intractable.
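The core idea can be illustrated without any TDL library: instead of building one in-memory collection, each raw sample is lifted, written to its own file, and read back only when requested. The sketch below is a minimal, stdlib-only illustration under our own assumptions (one pickle file per sample in a single directory, a user-supplied lift function); the class name PerSampleDiskStore is hypothetical, and this is not the API of PyG’s OnDiskDataset.

```python
import os
import pickle
import tempfile


class PerSampleDiskStore:
    """Process samples one at a time and keep each one in its own file on disk.

    Hypothetical illustration of the idea behind an on-disk dataset; it is not
    PyG's OnDiskDataset API and ignores concerns such as batching or collation.
    """

    def __init__(self, root):
        self.root = root
        os.makedirs(root, exist_ok=True)
        # Assumes every *.pkl file in root is a sample written by this class.
        self._count = sum(1 for f in os.listdir(root) if f.endswith(".pkl"))

    def _path(self, idx):
        return os.path.join(self.root, f"sample_{idx:08d}.pkl")

    def process(self, raw_samples, lift):
        # Only the sample currently being lifted is held in memory; it is
        # written to disk and released before the next one is processed.
        for raw in raw_samples:
            with open(self._path(self._count), "wb") as fh:
                pickle.dump(lift(raw), fh)
            self._count += 1

    def __len__(self):
        return self._count

    def __getitem__(self, idx):
        if not 0 <= idx < self._count:
            raise IndexError(idx)
        # Samples are read lazily, only when requested.
        with open(self._path(idx), "rb") as fh:
            return pickle.load(fh)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as root:
        store = PerSampleDiskStore(root)
        # A generator keeps the raw data streaming as well.
        store.process((list(range(n)) for n in range(1, 4)), lift=len)
        print(len(store), store[2])  # 3 3
```

Peak memory is then bounded by the largest single lifted sample rather than by the whole dataset, which is the property the challenge asks for.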

Bonus (Difficulty: Survival)

Tackle large-scale transductive learning with an OnDiskDataset loader. This is significantly more complex and requires a deep understanding of both TDL pipelines and the TopoBench architecture. If interested, please contact the organizers (see the Questions section) for details.

Category B.2: Pioneering New TDL Benchmark Tasks

(Difficulty: Varies)

Introduce novel benchmark tasks beyond standard node-level classification. Each submission must pair a dataset with the infrastructure needed to establish it as a new benchmark, including data splits, evaluation metrics, and a baseline model.

Example topics:

Link prediction in hypergraphs

Higher-order cell classification or regression

Hyperedge-dependent node classification

Your own creative proposal
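As an illustration of what “splits, evaluation metrics, and a baseline” can mean for the first topic, the sketch below sets up a toy hyperedge prediction task: it holds out some hyperedges as positives, draws size-matched random node sets as negatives, scores candidates with a naive pair-overlap baseline, and reports ROC-AUC. The protocol, function names, and baseline are our own hypothetical choices for illustration; the challenge does not prescribe any of them.

```python
import random
from itertools import combinations


def split_hyperedges(hyperedges, num_nodes, test_frac=0.2, seed=0, max_tries=10_000):
    """Hold out hyperedges as positive test examples and draw one size-matched
    random negative node set per positive (hypothetical protocol)."""
    rng = random.Random(seed)
    edges = [frozenset(e) for e in hyperedges]
    rng.shuffle(edges)
    n_test = max(1, int(len(edges) * test_frac))
    test_pos, train = edges[:n_test], edges[n_test:]
    existing = set(edges)
    test_neg, tries = [], 0
    while len(test_neg) < len(test_pos):
        tries += 1
        if tries > max_tries:
            raise RuntimeError("could not draw enough negative hyperedges")
        size = len(test_pos[len(test_neg)])
        candidate = frozenset(rng.sample(range(num_nodes), size))
        # Reject candidates that are real hyperedges or were already drawn.
        if candidate not in existing and candidate not in test_neg:
            test_neg.append(candidate)
    return train, test_pos, test_neg


def build_pair_index(train):
    """Set of node pairs that co-occur in at least one training hyperedge."""
    return {frozenset(p) for e in train for p in combinations(sorted(e), 2)}


def pair_overlap_score(candidate, pair_index):
    """Naive baseline: fraction of the candidate's node pairs seen together in training."""
    pairs = [frozenset(p) for p in combinations(sorted(candidate), 2)]
    return sum(p in pair_index for p in pairs) / len(pairs) if pairs else 0.0


def roc_auc(pos_scores, neg_scores):
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    total = 0.0
    for p in pos_scores:
        for n in neg_scores:
            total += 1.0 if p > n else (0.5 if p == n else 0.0)
    return total / (len(pos_scores) * len(neg_scores))


# Usage on a toy hypergraph with 7 nodes and 6 hyperedges.
hyperedges = [{0, 1, 2}, {1, 2, 3}, {3, 4}, {4, 5, 6}, {0, 6}, {2, 5}]
train, test_pos, test_neg = split_hyperedges(hyperedges, num_nodes=7, test_frac=0.34, seed=1)
index = build_pair_index(train)
auc = roc_auc([pair_overlap_score(e, index) for e in test_pos],
              [pair_overlap_score(e, index) for e in test_neg])
print(f"baseline AUC: {auc:.2f}")
```

A real submission would replace the naive baseline with a topological model and would likely need a more careful negative-sampling scheme, since purely random negatives can make the task too easy.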

Reward Outcomes

⭐ White paper: Every submission that meets the requirements will be included in a white paper summarizing the challenge’s findings (planned via PMLR through Topology, Algebra, and Geometry in Data Science 2025). Authors of qualifying submissions will be offered co-authorship. [1]

🏆 Cash prizes: Four winning teams (one per category) will be announced at TAG-DS 2025 during the Awards Ceremony.

💰 Mission A winners: $200 USD each (sponsored by Arlequin AI).

💰 Mission B winners: $800 USD each (sponsored by Arlequin AI).

🌴 Research internship — Geometric Intelligence Lab, UCSB (USA): A team, pending evaluation results and interest, will be invited for a visit of up to two months at the Geometric Intelligence Lab, University of California, Santa Barbara. During the visit, winners will work on cutting-edge methods for TDL. Travel costs will be reimbursed and financial assistance for lodging will be provided. Remote participation is also available. [2]

🏔️ Research internship — IMOS Lab, EPFL (Switzerland): A team, pending evaluation results and interest, will be invited for a research internship at the Intelligent Maintenance and Operations Systems (IMOS) Lab at EPFL in Lausanne, Switzerland. Winners will develop TDL methods in a world-class academic environment. MSc enrollment at the time of the internship is required. Financial assistance for lodging will be provided; winners will likely need to secure a visa and work authorization.

Note

Organizers reserve the right to reallocate prize money between categories in the event of a significant disparity in the number or quality of submissions.

Deadline

The final submission deadline is November 25th, 2025 (AoE). Participants may continue modifying their PRs until this time.

Guidelines

Eligibility: Participation is free and open to all. However, for legal reasons, individuals affiliated with institutions that appear in the sections of the Restricted Foreign Research Institutions list are not eligible for the reward outcomes of the challenge — including co-authorship on the white paper summarizing the challenge findings and submissions.

Registration: To participate in the challenge, participants must (1) open a Pull Request (PR) on TopoBench and (2) fill out the Registration Google Form with PR and team information. Each submission (i.e., each PR) must be accompanied by a Registration Form to be valid.

Picking a dataset: Please refer to the open Pull Requests in TopoBench (see open PRs) to see which datasets are already being implemented in each category.

Submission: For a submission to be valid, teams must:

Submit a valid PR before the deadline.

Fill out the registration form before the deadline.

Ensure the PR passes all tests.

Tag the PR with the appropriate category (one of: category-A1, category-A2, category-B1, category-B2).

Respect all submission requirements.

Dataset loaders:

Each PR may contain at most one dataset loader.

A loader may support multiple datasets, but in such cases, the PR should include separate configuration files for each dataset. (Example: TopoBench uses the “TUDataset” class from PyG and provides multiple configuration files for its different datasets.)

Teams:

Teams are allowed, with a maximum of 2 members. (If you wish to form a larger team, please contact the organizers — see the Questions section — for discussion and approval.)

The same team can submit multiple datasets through different PRs. Make sure to fill out the registration Google Form for each PR.

The same team can participate in multiple challenge categories.

Early submissions:

We encourage participants to submit PRs early. This allows time to resolve potential issues.

In cases where multiple high-quality submissions cover overlapping datasets, earlier submissions will be given priority consideration.

Submission Requirements

A submission consists of a Pull Request (PR) to TopoBench. The PR title must be:

Category: [A1|A2|B1|B2]; Team name: <team name>; Dataset: <Dataset Name>
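The bracketed part means “pick exactly one category”. To check a title locally before opening the PR, a small regular expression is enough. The sketch below is a hypothetical helper, not part of TopoBench’s CI, and it assumes the separators are matched literally (a semicolon followed by a single space between fields).

```python
import re

# Hypothetical local check of the required PR title format; not part of
# TopoBench's CI. Separators are matched literally ("; " between fields).
TITLE_PATTERN = re.compile(
    r"^Category: (?P<category>A1|A2|B1|B2); "
    r"Team name: (?P<team>[^;]+); "
    r"Dataset: (?P<dataset>[^;]+)$"
)


def parse_pr_title(title):
    """Return (category, team, dataset) if the title follows the format, else None."""
    match = TITLE_PATTERN.match(title.strip())
    if match is None:
        return None
    return match["category"], match["team"].strip(), match["dataset"].strip()


print(parse_pr_title("Category: A2; Team name: MyTeam; Dataset: MyHypergraph"))
# ('A2', 'MyTeam', 'MyHypergraph')
```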

Submissions may implement datasets already proposed in the literature or novel datasets. For novel datasets, neither the challenge nor its related publications will prevent you from publishing your own work — you retain full credit for your data. We only ask that the data be hosted on an open-source platform.

Requirements for Mission A (Categories A1 and A2)

1. {name}_dataset_loader.py or {name}_datasets_loader.py

Store in topobench/data/loaders/{domain}, where {domain} is the dataset’s domain (e.g., graph).

Define a class {Name}DatasetLoader implementing load_dataset() that loads the entire dataset (optionally with pre-defined splits). This class must inherit from data.loaders.base.AbstractLoader.

2. (Only if necessary) {name}_dataset.py or {name}_datasets.py

Store in topobench/data/datasets.

Define a class {Name} implementing the required interfaces for InMemoryDataset. See existing examples in the directory for the required functions.

3. Testing

a. All contributed files must pass the pre-existing unit tests in test/data.

b. Any contributed methods that are not currently tested must be accompanied by new test files stored in the appropriate subdirectory of test/data. Each contributed test file should include test functions that correspond one-to-one with contributed functions (as in existing tests).

c. TopoBench uses Codecov to measure coverage. Your PR must match or exceed 93% coverage. You can find the Codecov report as a bot comment on your PR after CI runs.

d. Include a pipeline test showing that a model (any model already in TopoBench) can train on your dataset. Specifically, fill out test/pipeline/test_pipeline.py with a dataset and model configuration of your choice to prove that the pipeline runs successfully. We do not evaluate submissions by training performance.
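The loader naming and inheritance requirements above can be pictured with the following skeleton for a hypothetical dataset called “ToyGraph” in the graph domain. The AbstractLoader defined here is a self-contained stand-in so the example runs on its own; the real data.loaders.base.AbstractLoader in TopoBench has its own constructor and is expected to return a PyG dataset, so consult the existing loaders and the tutorial rather than copying this verbatim.

```python
from abc import ABC, abstractmethod


class AbstractLoader(ABC):
    """Stand-in for data.loaders.base.AbstractLoader (the real class differs)."""

    def __init__(self, parameters):
        self.parameters = parameters

    @abstractmethod
    def load_dataset(self):
        """Load and return the entire dataset."""


class ToyGraphDatasetLoader(AbstractLoader):
    """Hypothetical {Name}DatasetLoader for a dataset named 'ToyGraph'.

    Following the convention, it would live in
    topobench/data/loaders/graph/toygraph_dataset_loader.py.
    """

    def load_dataset(self):
        # A real loader would download or read files and return a dataset
        # object (optionally with pre-defined splits); plain dicts stand in here.
        num_graphs = self.parameters.get("num_graphs", 2)
        return [{"num_nodes": 3, "edges": [(0, 1), (1, 2)]} for _ in range(num_graphs)]


loader = ToyGraphDatasetLoader({"num_graphs": 3})
print(len(loader.load_dataset()))  # 3
```

Because load_dataset is declared abstract, forgetting to implement it in a subclass fails loudly at instantiation time instead of silently at training time.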

Tip

For a step-by-step example, see the tutorial: tutorial_add_custom_dataset.ipynb.

Requirements for Mission B (Categories B1 and B2)

This mission may require significant contributions and modifications to TopoBench, so explicit, exhaustive requirements are not feasible. Participants are encouraged to follow the general structure and logic of TopoBench as much as possible; however, given the complexity of these tasks, this constraint is flexible.

Minimum expectations

Coverage: Match or exceed the existing 93% Codecov coverage target.

Testing: Include at least one pipeline test demonstrating that a model (either existing or newly contributed) can train on the contributed dataset/task for a limited number of epochs (see point 3.d of the Mission A submission requirements for an example).

Coordination and support

We are happy to discuss implementation and testing ideas. Please reach out at topological.intelligence@gmail.com.

Note

For very large datasets (Mission B1), write tests that operate on small slices of the data to keep CI/runtime reasonable and avoid lengthy tests.
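One simple way to follow this advice is to iterate over only the first few samples of a (possibly lazily loaded) dataset inside each test. The sketch below is a hypothetical pytest-style example; small_slice is an illustrative helper name, and the generator stands in for a dataset far too large to materialize in CI.

```python
from itertools import islice


def small_slice(dataset, k=8):
    """Return at most the first k samples so a test stays fast on huge datasets.

    Works with any iterable, including a lazily loaded on-disk dataset.
    """
    return list(islice(iter(dataset), k))


def test_first_samples_are_valid():
    # Hypothetical stand-in for a huge dataset: a lazy generator that is
    # never fully materialized, because islice stops after k items.
    huge = ({"num_nodes": i % 5 + 1} for i in range(10**9))
    for sample in small_slice(huge, k=4):
        assert sample["num_nodes"] >= 1
```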

Evaluation

Award Categories

One winning submission will be crowned per mission category:

Category A.1: Best “Expanding Graph-Based Datasets” implementation

Category A.2: Best “Expanding Higher-Order Dataset” implementation

Category B.1: Best “Large-Scale Inductive Dataset” implementation

Category B.2: Best “New TDL Benchmark Task” implementation

Additionally, two teams will be selected for invited visits across all mission categories based on overall quality, level of difficulty, and impact of contribution. Honorable mentions may also be awarded. Particular attention will be given to any successful B1 Bonus submission.

Evaluation Procedure

A panel of TopoBench maintainers and collaborators will vote using the Condorcet method to select the best submission in each mission category.
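Under the Condorcet method, a submission wins if a majority of panelists prefer it over every other submission in head-to-head comparison. The sketch below computes such a winner under the assumption that every panelist submits a complete ranking. It returns None when the pairwise preferences form a cycle; how the panel resolves that case is not described here, so the sketch deliberately does not guess a tie-breaking rule.

```python
from itertools import combinations


def condorcet_winner(ballots):
    """Return the candidate that beats every other in pairwise majority, or None.

    Each ballot is a complete ranking, best first (an assumption of this sketch).
    """
    candidates = set(ballots[0])
    position = [{c: i for i, c in enumerate(b)} for b in ballots]
    beats = {c: set() for c in candidates}
    for a, b in combinations(sorted(candidates), 2):
        a_over_b = sum(p[a] < p[b] for p in position)
        b_over_a = len(ballots) - a_over_b  # complete rankings: no abstentions
        if a_over_b > b_over_a:
            beats[a].add(b)
        elif b_over_a > a_over_b:
            beats[b].add(a)
    for c in candidates:
        if len(beats[c]) == len(candidates) - 1:
            return c
    return None  # no Condorcet winner (e.g., a preference cycle)


ballots = [["X", "Y", "Z"], ["Y", "X", "Z"], ["X", "Z", "Y"]]
print(condorcet_winner(ballots))  # X
```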

Evaluation criteria include:

Correctness: Does the submission implement the dataset correctly? Is it reasonable and well-defined?

Code quality: How readable and clean is the implementation? How well does the submission respect the requirements?

Documentation & tests: Are docstrings clear? Are unit tests robust?

Important

These criteria do not reward final model performance on the dataset. The goal is to deliver well-written, usable datasets/infrastructure that enable further experimentation and insight.

A panel of TopoBench developers and TDL experts will decide on the two teams to be invited for visits, pending interest as indicated in their Registration Forms. Internship opportunities and cash prizes are not mutually exclusive.

Questions

Feel free to contact the organizers at topological.intelligence@gmail.com.

Important

👉 Now live: Join us in expanding the data landscape of Topological Deep Learning!